Deploying Local LLMs with Ollama

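Advantages of Ollama

- Lightweight: quick to install and simple to run

- Local: models run on your own machine, so your data stays there

- Multi-model support: one tool can pull and run many open models, such as the DeepSeek-R1 model used below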

Installing Ollama

Download and install it from the official Ollama website.

Or install it with Homebrew:

```shell
brew install ollama --cask
```
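After installing, you can run a quick sanity check to confirm that the CLI is on PATH and that the Ollama server is answering. The sketch below assumes Ollama's default API address, http://127.0.0.1:11434. The helper name check_ollama is hypothetical. If the server is not reachable, start the Ollama app or run ollama serve.

```shell
# Hypothetical helper: check that the Ollama CLI is installed and its server responds.
# Assumes the default API address; pass another base URL as the first argument if needed.
check_ollama() {
  base_url="${1:-http://127.0.0.1:11434}"
  if ! command -v ollama >/dev/null 2>&1; then
    echo "ollama CLI not found on PATH"
    return 1
  fi
  # Print the installed version
  ollama --version
  # The root endpoint returns a short status text when the server is up
  if curl -fsS -o /dev/null "$base_url"; then
    echo "Ollama server reachable at $base_url"
  else
    echo "Ollama server not reachable at $base_url"
    return 1
  fi
}

# Example:
#   check_ollama
```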

Deploying DeepSeek-R1

```shell
ollama run deepseek-r1:32b
```
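On first use, ollama run pulls the model and then opens an interactive chat. Use ollama list to see which models are already downloaded. You can also call the same model over Ollama's local REST API, which is what RAGFlow connects to later in this guide. The sketch below uses curl and assumes the default address and the non-streaming POST /api/generate endpoint. The helper names and the OLLAMA_BASE_URL variable are hypothetical. The JSON escaping covers only double quotes and backslashes in single-line prompts.

```shell
# Hypothetical helper: build the JSON body for POST /api/generate.
# Escapes backslashes and double quotes only; newlines in the prompt are not handled.
ollama_payload() {
  escaped=$(printf '%s' "$2" | sed 's/\\/\\\\/g; s/"/\\"/g')
  printf '{"model": "%s", "prompt": "%s", "stream": false}' "$1" "$escaped"
}

# Hypothetical helper: send one prompt and print the raw JSON reply.
# OLLAMA_BASE_URL is an illustrative variable, defaulting to Ollama's standard port.
ask_ollama() {
  curl -s "${OLLAMA_BASE_URL:-http://127.0.0.1:11434}/api/generate" \
    -H 'Content-Type: application/json' \
    -d "$(ollama_payload "$1" "$2")"
}

# Example:
#   ask_ollama deepseek-r1:32b "Explain RAG in one sentence."
```

With stream set to false, the reply arrives as a single JSON object, and its response field holds the generated text.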

Deploying RAGFlow

IMPORTANT

- While we also test RAGFlow on ARM64 platforms, we do not maintain RAGFlow Docker images for ARM. However, you can build an image yourself on a linux/arm64 or darwin/arm64 host machine as well.

- For ARM64 platforms, please upgrade the xgboost version in pyproject.toml to 1.6.0 and ensure unixODBC is properly installed.

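RAGFlow does not publish Docker images for ARM platforms, so on an ARM machine you need to build the image locally.

For the build steps, see the official guide "Build RAGFlow Docker image" in the RAGFlow documentation.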
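To find out whether your machine needs a local build, check the CPU architecture. Apple Silicon Macs report arm64 from uname -m, and ARM Linux hosts report aarch64. The helper below is a hypothetical wrapper around that check. The clone URL in the comment is RAGFlow's public GitHub repository.

```shell
# Hypothetical helper: succeed (exit 0) if the given or current machine architecture is ARM64.
is_arm64() {
  case "${1:-$(uname -m)}" in
    arm64|aarch64) return 0 ;;
    *) return 1 ;;
  esac
}

if is_arm64; then
  echo "ARM64 host: build the RAGFlow image locally"
  # e.g. git clone https://github.com/infiniflow/ragflow.git && cd ragflow
else
  echo "Non-ARM64 host: a prebuilt RAGFlow image can be used"
fi
```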

If you are in mainland China, first change ARG NEED_MIRROR in the Dockerfile from 0 to 1 so that the build uses domestic package mirrors.
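You can script the Dockerfile edit. The sketch below assumes the Dockerfile declares the argument exactly as ARG NEED_MIRROR=0 on its own line. The helper name is hypothetical. It writes to a temporary file instead of using sed -i, because sed -i takes different arguments on macOS (BSD sed) and Linux (GNU sed).

Because NEED_MIRROR is a build ARG, Docker's --build-arg flag can also override it without touching the file. The commented build commands show both options. Run them from the repository root; the ragflow:nightly tag matches the image name this guide puts in docker/.env.

```shell
# Hypothetical helper: switch "ARG NEED_MIRROR=0" to "ARG NEED_MIRROR=1" in a Dockerfile.
# Assumes the line appears exactly in that form (no trailing spaces).
enable_need_mirror() {
  file="${1:-Dockerfile}"
  sed 's/^ARG NEED_MIRROR=0$/ARG NEED_MIRROR=1/' "$file" > "$file.tmp" &&
    mv "$file.tmp" "$file"
}

# Build the image from the repository root after enabling the mirror:
#   enable_need_mirror Dockerfile
#   docker build --platform linux/arm64 -f Dockerfile -t ragflow:nightly .
# Or override the ARG at build time and leave the Dockerfile unchanged:
#   docker build --platform linux/arm64 --build-arg NEED_MIRROR=1 -f Dockerfile -t ragflow:nightly .
```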

Next, set RAGFLOW_IMAGE in docker/.env to the name of the image you built, ragflow:nightly.
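You can script this .env change too. The sketch below uses awk; set_env_value is a hypothetical helper. It replaces the uncommented KEY=... line, leaves commented-out alternatives alone, and appends the key if it is missing. Values pass through awk -v, which interprets backslash escapes, so the helper is meant for plain values such as image names.

```shell
# Hypothetical helper: set KEY=VALUE in a dotenv-style file.
# Replaces every line that starts with "KEY=", keeps "# KEY=..." comments untouched,
# and appends "KEY=VALUE" when the key is not present.
set_env_value() {
  file="$1"; key="$2"; value="$3"
  awk -v k="$key" -v v="$value" '
    index($0, k "=") == 1 { print k "=" v; found = 1; next }
    { print }
    END { if (!found) print k "=" v }
  ' "$file" > "$file.tmp" && mv "$file.tmp" "$file"
}

# Example: point docker compose at the locally built image
#   set_env_value docker/.env RAGFLOW_IMAGE ragflow:nightly
```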

Then start the services:

```shell
cd docker
docker compose -f docker-compose-macos.yml up -d
```

Once the services have started, open 127.0.0.1:80 in a browser to use RAGFlow.

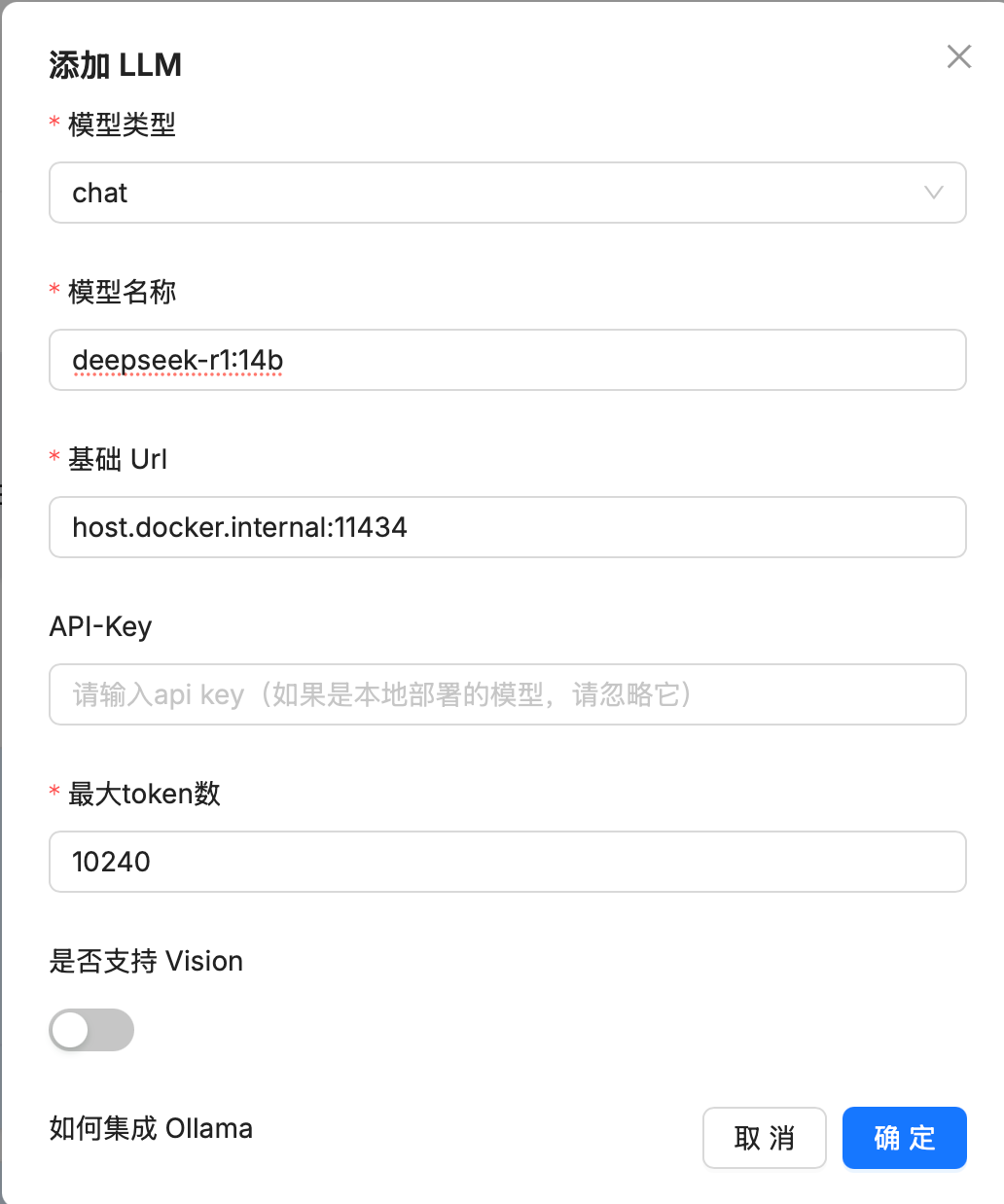
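The containers can take a while to become ready after up -d. The sketch below shows one way to check, under some assumptions. docker compose ps lists the service states. docker logs -f ragflow-server follows the server log, assuming the container is named ragflow-server as in RAGFlow's README. A hypothetical wait_for_http helper polls the web UI until it answers.

```shell
# Hypothetical helper: poll a URL until it answers with a success status or we give up.
# $1 = URL, $2 = number of attempts (default 30), 2 seconds apart.
wait_for_http() {
  url="$1"; tries="${2:-30}"; i=0
  while :; do
    if curl -fsS -o /dev/null "$url" 2>/dev/null; then
      echo "up: $url"
      return 0
    fi
    i=$((i + 1))
    if [ "$i" -ge "$tries" ]; then
      echo "not reachable: $url"
      return 1
    fi
    sleep 2
  done
}

# Example, run from the docker directory:
#   docker compose -f docker-compose-macos.yml ps
#   docker logs -f ragflow-server
#   wait_for_http http://127.0.0.1:80
```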

Configuring RAGFlow

When configuring a local model, watch out for one detail:

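RAGFlow runs in Docker with bridge networking, so inside the container 127.0.0.1 refers to the container itself, not your machine. To reach a service running on the host, use host.docker.internal:port.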
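In practice, the Ollama address you enter in RAGFlow should use host.docker.internal instead of 127.0.0.1. With Ollama's default port, that gives http://host.docker.internal:11434. The hypothetical helper below makes the rewrite explicit. It only handles URLs that spell out the port.

```shell
# Hypothetical helper: turn a host-side URL into the form a bridge-networked
# container needs. Inside the container, localhost/127.0.0.1 is the container itself,
# so they are replaced with host.docker.internal. Only URLs with an explicit port match.
to_container_url() {
  printf '%s\n' "$1" |
    sed -e 's#://localhost:#://host.docker.internal:#' \
        -e 's#://127\.0\.0\.1:#://host.docker.internal:#'
}

# Example:
#   to_container_url http://127.0.0.1:11434   # prints http://host.docker.internal:11434
```

To confirm that the container can actually reach Ollama, run something like docker exec ragflow-server curl -s http://host.docker.internal:11434. It should print Ollama's status text, assuming the container is named ragflow-server and its image includes curl.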